I've seen many people complaining about it. Is it just because they are big, or are they really worse... Read the rest at https://ift.tt/2Nf1rZ5

from Web Hosting Talk - Web Hosting https://ift.tt/2Nf1rZ5

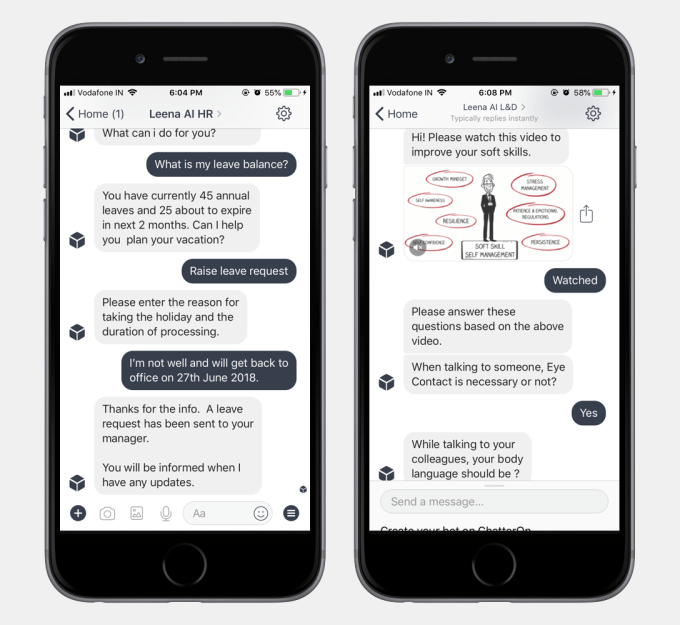

Say you have a job with a large company and you want to know how much vacation time you have left, or how to add your new baby to your healthcare. This usually involves emailing or calling HR and waiting for an answer, or it could even involve navigating multiple systems to get what you need.

Leena AI, a member of the Y Combinator Summer 2018 class, wants to change that by building HR bots that answer employees' questions instantly.

The bots can be integrated into Slack or Workplace by Facebook and they are built and trained using information in policy documents and by pulling data from various back-end systems like Oracle and SAP.

Adit Jain, co-founder at Leena AI, says the company has its roots in another startup called Chatteron, which the founders started after they graduated from college in India in 2015. That product helped people build their own chatbots. Jain says that along the way, while doing market research, they discovered a particularly strong need in HR. They started Leena AI last year to address that specific requirement.

Jain says the team learned through its experience with Chatteron that, when building bots, it’s better to concentrate on a single subject because the underlying machine learning model gets better the more it’s used. “Once you create a bot, for it to really to add value and be [extremely] accurate, and for it to really go deep, it takes a lot of time and effort and that can only happen through verticalization,” Jain explained.

Photo: Leena AI

What’s more, as the founders have become more knowledgeable about the needs of HR, they have learned that 80 percent of the questions cover similar topics like vacation, sick time and expense reporting. They have also seen companies using similar back-end systems, so they can now build standard integrators for common applications like SAP, Oracle and NetSuite.

Of course, even though people may ask similar questions, the company may have unique terminology or people may ask the question in an unusual way. Jain says that’s where the natural language processing (NLP) comes in. The system can learn these variations over time as they build a larger database of possible queries.
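To make the idea of mapping varied phrasings onto a small set of common topics concrete, here is a minimal Python sketch. It is not Leena AI's method: it swaps in simple keyword overlap in place of a trained NLP model, and the topic names, synonym sets, and the `classify` and `learn_variant` helpers are all hypothetical. It only illustrates how unusual company terminology can be folded into a topic's vocabulary as new variants are observed.

```python
from __future__ import annotations

import re

# Hypothetical topic vocabularies; a real system would learn these from data.
TOPIC_SYNONYMS: dict[str, set[str]] = {
    "vacation": {"vacation", "pto", "leave", "holiday", "holidays"},
    "sick_time": {"sick", "ill", "illness"},
    "expenses": {"expense", "expenses", "reimbursement", "receipt"},
}


def tokenize(text: str) -> set[str]:
    """Lower-case the query and split it into alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def classify(query: str) -> str | None:
    """Return the topic whose vocabulary overlaps the query most, or None if nothing matches."""
    tokens = tokenize(query)
    best_topic, best_score = None, 0
    for topic, synonyms in TOPIC_SYNONYMS.items():
        score = len(tokens & synonyms)
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic


def learn_variant(topic: str, term: str) -> None:
    """Fold a newly observed, company-specific term into a topic's vocabulary."""
    TOPIC_SYNONYMS[topic].add(term.lower())


print(classify("How much PTO do I have left?"))
print(classify("How many recharge days can I take?"))
learn_variant("vacation", "Recharge")
print(classify("How many recharge days can I take?"))
```

The first query matches "vacation" through the synonym "pto"; the second returns `None` until "recharge" has been learned as a variant, after which it also maps to "vacation".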

The company just launched in 2017 and already has a dozen paying customers. They hope to double that number in just 60 days. Jain believes being part of Y Combinator should help in that regard. The partners are helping the team refine its pitch and making introductions to companies that could make use of this tool.

Their ultimate goal is nothing less than to be ubiquitous, to help bridge multiple legacy systems to provide answers seamlessly for employees to all their questions. If they can achieve that, they should be a successful company.

Earlier this month, the Wi-Fi Alliance issued a press release announcing the availability of WPA3. Built on top of several existing but not widely deployed technologies, WPA3 makes several significant improvements over the security provided by WPA2. Most notably, WPA3 should close the door on offline dictionary-based password cracking attempts by leveraging a more modern […]… Read More

The post WPA3: What You Need To Know appeared first on The State of Security.
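Why WPA2-Personal is exposed to offline dictionary attacks can be illustrated with a short Python sketch. In WPA2-Personal, the pairwise master key is derived from the passphrase and SSID with PBKDF2-HMAC-SHA1 (4,096 iterations, 256-bit output), so an attacker who has captured a handshake can test guesses locally, at full speed, without contacting the access point again. As a simplification, this sketch compares derived keys directly; a real attack instead checks each candidate against the MIC in the captured four-way handshake. The function names, passphrase, and wordlist are illustrative assumptions.

```python
from __future__ import annotations

import hashlib


def wpa2_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive a WPA2-Personal pairwise master key: PBKDF2-HMAC-SHA1, SSID as salt, 4096 rounds, 32 bytes."""
    return hashlib.pbkdf2_hmac("sha1", passphrase.encode("utf-8"), ssid.encode("utf-8"), 4096, 32)


def offline_dictionary_attack(target_pmk: bytes, ssid: str, wordlist: list[str]) -> str | None:
    """Test each candidate locally; no further contact with the access point is needed.

    Simplification: compares keys directly instead of verifying a captured handshake MIC.
    """
    for candidate in wordlist:
        if wpa2_pmk(candidate, ssid) == target_pmk:
            return candidate
    return None


# Illustrative values only.
captured = wpa2_pmk("sunshine42", "HomeNet")
print(offline_dictionary_attack(captured, "HomeNet", ["123456", "password", "sunshine42"]))
```

Because every guess in the loop is a purely local computation, the only limit is the attacker's hardware. Closing this door means ensuring that a captured exchange gives the attacker nothing to test guesses against offline, so each guess costs a live attempt against the network.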

“If I was wantin’ to get there, I wouldn’t start from here.”

It’s an old Irish joke about the response you might get upon asking a local farmer for directions. It’s also a not uncommon position taken by architects of newly requested systems when they see the existing IT environment. Green-field builds are always their preference. Unfortunately, few green fields remain when it comes to building new IT systems, especially when the new system is broad in scope and must interact extensively with existing upstream and downstream applications.

Among new IT systems, those supporting a digital business are among the broadest and most complex imaginable. Furthermore, unless the business in question is a start-up, the digital business systems must work with existing systems in diverse ways: exchanging data with them, supplementing or complementing them, or even displacing some of them over time.

How should an architect even begin to think about designing such a system?

Over the history of IT, the system that has most in common with digital business is the data warehouse in its many and varied evolutionary guises. Shared characteristics include: a primary focus on data/information; a heavy dependence on completely independent, unpredictably changeable, and increasingly external upstream data sources; and poorly defined and highly changeable downstream business needs and applications.

The premise, therefore, of this issue of the Cutter Business Technology Journal with Guest Editor Barry Devlin is that a (successful) data warehouse is the best starting point for the organizational, architectural, and technological journey to becoming a digital business.

Topics may include, but are not limited to, the following:

• How does the architecture of a digital business compare to a data warehouse? What lessons can designers of digital businesses learn from existing data warehouse implementations, both successful and unsuccessful? What role would a data lake play? Where do real-time and near real-time concepts, such as the operational data store, apply and how would they need to change?

• What can a digital business learn from the organizational challenges of defining and managing the imprecise and changing requirements of business users? How can business value be proven? Can real, long-running data warehouse processes/projects show how to avoid “change management chaos” and the leaching of value generation from multi-year programs?

• What role can the BI (Business Intelligence) Center of Competence/Excellence play in the roll-out of a digital business? Which skills will need to be changed in the CoC/CoE?

• How will data governance/management be handled? What are the challenges of externally sourced data, especially the Internet of Things? Will data modelling, as practiced in data warehousing, need to change significantly and, if so, how?

• Which data warehouse technologies transfer directly to a digital business system? What changes would be required in ETL (extract-transform-load) systems? Will agile data warehouse automation tools better meet digital business needs? How important will data virtualization be? Where will today’s analytics adapt? What is the role of artificial intelligence?

Abstract Submissions due July 6, 2018. Please send article ideas to Christine Generali and Barry Devlin including an abstract and short article outline showing major discussion points. Accepted articles will be due August 1, 2018. Final article length is typically 2,000-3,500 words plus graphics. More editorial guidelines.

Cutter Business Technology Journal is published monthly as a forum for thought leaders, business practitioners and academics to present innovative ideas, current research and solutions to the critical issues facing business technology professionals in industries worldwide competing in today’s digital economy.

Learn more about Cutter Business Technology Journal including recently published issues!

LONDON (Reuters) – Facebook is continuing to be evasive in its answers to a British parliamentary committee examining a scandal over misuse of the social media company’s data by Cambridge Analytica, the committee’s chair said on Friday. Cambridge Analytica said

The post Facebook still evasive over Cambridge Analytica and fake news: UK lawmakers appeared first on CloudTweaks.

Multinational apparel design and manufacturing corporation Adidas alerted customers of an incident that possibly affected the security of their data. On 28 June, Adidas’ headquarters in Herzogenaurach, Germany, posted a statement about the incident to its website. The notice revealed that Adidas first learned about the issue two days earlier when an unauthorized party […]… Read More

The post Adidas Alerts Customers of Possible Data Security Incident appeared first on The State of Security.

Reducing infrastructure cost has traditionally been the driver for adopting IaaS cloud-hosting. For companies where capabilities are spread across several environments because of a decentralized business strategy or intensive M&A activities, reducing operational costs via cloud migration makes great sense. But what if your organization has already consolidated its environment? Is there still an argument for cloud migration? According to Cutter Consortium Senior Consultant Vittorio Cretella (who until retiring a few months ago was CIO of Mars, Inc.), there is.

In a recent Cutter Consortium Advisor, “The CIO and the Holistic Business Case for Cloud Migration,” Cretella explained:

“For many companies that have already consolidated their data processing assets into fewer facilities, often simultaneously implementing storage and server virtualization, operational cost benefits are likely to be minimal. That was my experience with a consolidated environment where more than 80% of data processing capacity was run out of two global, redundant data centers with extensive use of virtualization (60% of 3,200 servers were virtual using VMWare). The key argument I used for cloud adoption was cost avoidance, which resulted from the closure of one of the two facilities — thus saving capital investment for renovations/upgrades and future growth.”

In addition, Cretella considered the additional cost savings that would come from using cloud infrastructure as a way of automating IT operations, which fit well with Mars’ ongoing initiative to automate processes in the I/O area. One of Cretella’s key decisions here was to include a “sunset strategy” for legacy applications that were not suitable for cloud migration at a reasonable cost.

Research: Read Vittorio Cretella’s full Advisor, “The CIO and the Holistic Business Case for Cloud Migration”.

Cutter clients can get insight into sustainable cloud options in Looking at All the Sides of Sustainable Cloud.

Upcoming Webinar! Discover 3 Ways to Waste Money on AWS (and How You Can Avoid Them). Register now to learn tips from Cutter Consortium’s Frank Contrepois on making the most of your AWS investment.

Consulting: Everyone wants to save money on the cloud. But how do you actually do it? With a Strategic Cloud Procurement Assessment from James Mitchell and the Cutter Consortium-Strategic Blue team, you’ll discover just what capacity you actually need, and where and how to procure it to get the most value from your cloud investment. This assessment is an invaluable tool for anyone responsible for buying cloud services, including heads of procurement, CTOs, CIOs, and VPs of Operations.

The detailed personal information of 230 million consumers and 110 million business contacts, including phone numbers, addresses, dates of birth, estimated income, number of children, and the age and gender of children, was exposed by careless security.

The post Hitherto unknown marketing firm exposed hundreds of millions of Americans’ data appeared first on The State of Security.

A new research report by financial giant UBS suggests that millennials are ditching the kitchen and instead opting for food delivery in a report titled “Is the Kitchen Dead.”

The report is not available to the public and we haven't seen it.

But it's nicely covered in the Forbes article Millennials Are Ordering More Food Delivery, But Are They Killing The Kitchen, Too?

According to the article, time-starved millennials in particular are cooking less because it's so easy to order food from platforms such as Uber Eats, DoorDash and Grubhub.

Key quote:

UBS forecast delivery sales could rise an annual average of more than 20% to $365 billion worldwide by 2030, from $35 billion in 2017.
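As a quick sanity check on the UBS forecast quoted above, the compound annual growth rate implied by going from $35 billion in 2017 to $365 billion in 2030 can be computed directly. The `cagr` helper is ours for illustration, not anything from the UBS report.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures from the UBS forecast quoted above: $35B (2017) to $365B (2030).
rate = cagr(35e9, 365e9, 2030 - 2017)
print(f"Implied compound annual growth: {rate:.1%}")
```

This gives a compound rate just under 20% (about 19.8%). That need not conflict with the quoted "annual average of more than 20%": if that figure is an arithmetic mean of year-by-year rates, it is always at least as large as the compound rate.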

UBS is even suggesting this forecast could be low, saying:

"There could be a scenario where by 2030 most meals currently cooked at home are instead ordered online and delivered from either restaurants or central kitchens.”

An American restaurant chain revealed it suffered a data breach affecting customers’ payment card details at most of its locations. On 22 June, PDQ issued a statement explaining that a malicious attacker obtained unauthorized access to its computer system and acquired the names, credit card numbers, expiration dates and cardholder verification value (CVV) of some […]… Read More

The post Restaurant Chain Struck by Payment Card Data Breach appeared first on The State of Security.

During our first release of findings from the SentinelOne Global Ransomware Report 2018, we highlighted key findings as to why organizations felt they were the victim of a ransomware attack, how confident they are in defending against future attacks, and why.

The post Global Ransomware Awareness Report 2018 appeared first on CloudTweaks.

For the last five years, we’ve been discussing the future of AI with many organizations, including Intel, at the forefront of that conversation. However, while ensuring the world gets to experience new heights and unparalleled technologies, organizations failed to notice

The post The Future of AI is Here appeared first on CloudTweaks.

People in the UK who bought tickets since February have been told to be wary of suspicious activity. UK customers of Ticketmaster have been warned they could be at risk of fraud or identity theft after the global ticketing group revealed a major

The post Identity theft warning after major data breach at Ticketmaster appeared first on CloudTweaks.

Microsoft today launched two new Azure regions in China. These new regions, China North 2 in Beijing and China East 2 in Shanghai, are now generally available and will complement the existing two regions Microsoft operates in the country (with the help of its local partner, 21Vianet).

As the first international cloud provider in China when it launched its first region there in 2014, Microsoft has seen rapid growth in the region and there is clearly demand for its services there. Unsurprisingly, many of Microsoft’s customers in China are other multinationals that are already betting on Azure for their cloud strategy. These include the likes of Adobe, Coke, Costco, Daimler, Ford, Nuance, P&G, Toyota and BMW.

In addition to the new China regions, Microsoft also today launched a new availability zone for its region in the Netherlands. While availability zones have long been standard among the big cloud providers, Azure only launched this feature — which divides a region into multiple independent zones — into general availability earlier this year. The regions in the Netherlands, Paris and Iowa now offer this additional safeguard against downtime, with others to follow soon.

In other Azure news, Microsoft also today announced that Azure IoT Edge is now generally available. In addition, Microsoft announced the second generation of its Azure Data Lake Storage service, which is now in preview, and some updates to the Azure Data Factory, which now includes a web-based user interface for building and managing data pipelines.

For any company built on top of machine learning operations, the more data they have, the better off they are, as long as they can keep it all under control. But as more and more information pours in from disparate sources, gets logged in obscure databases and is generally hard (or slow) to query, getting it all into one neat place where a data scientist can actually start running the statistics is quickly becoming one of machine learning’s biggest bottlenecks.

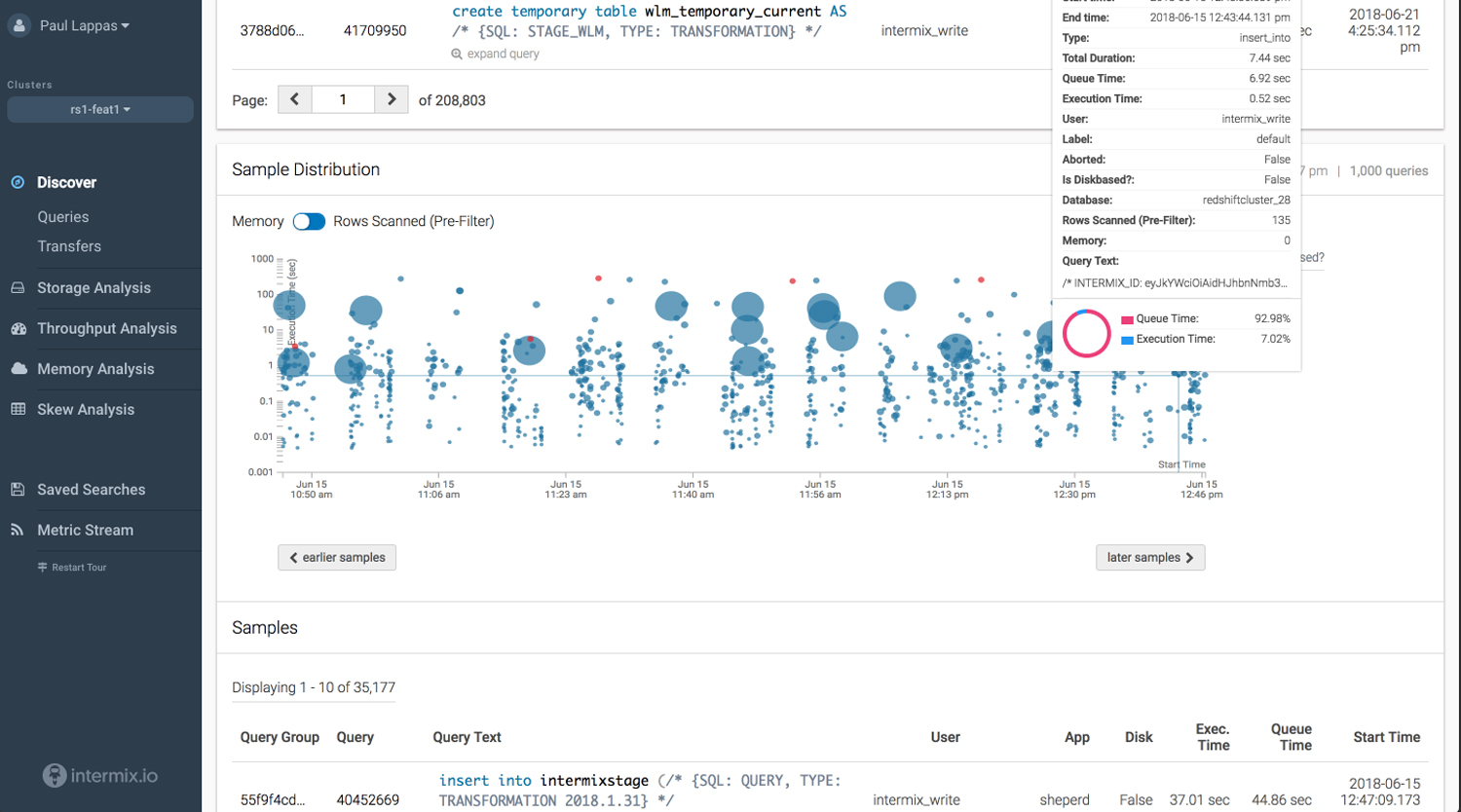

That’s a problem Intermix.io and its founders, Paul Lappas and Lars Kamp, hope to solve. Engineers get a granular look at all of the different nuances behind what’s happening with some specific function, from the query all the way through all of the paths it’s taking to get to its end result. The end product is one that helps data engineers monitor the flow of information going through their systems, regardless of the source, to isolate bottlenecks early and see where processes are breaking down. The company also said it has raised seed funding from Uncork Capital, S28 Capital and PAUA Ventures, along with Bastian Lehmann, CEO of Postmates, and Hasso Plattner, co-founder of SAP.

“Companies realize being data driven is a key to success,” Kamp said. “The cloud makes it cheap and easy to store your data forever, machine learning libraries are making things easy to digest. But a company that wants to be data driven wants to hire a data scientist. This is the wrong first hire. To do that they need access to all the relevant data, and have it be complete and clean. That falls to data engineers who need to build data assembly lines where they are creating meaningful types to get data usable to the data scientist. That’s who we serve.”

Intermix.io works in a couple of ways: first, it tags all of that data, giving the service a meta-layer of understanding what does what, and where it goes; second, it taps every input in order to gather metrics on performance and help identify those potential bottlenecks; and lastly, it’s able to track that performance all the way from the query to the thing that ends up on a dashboard somewhere. The idea here is that if, say, some server is about to run out of space somewhere or is showing some performance degradation, that’s going to start showing up in the performance of the actual operations pretty quickly — and needs to be addressed.
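The instrumentation idea described above, tagging each step and measuring it from the query through to the dashboard, can be sketched in a few lines of Python. This is not Intermix.io's implementation: `PipelineTracer`, the stage names, and the simulated `time.sleep` workloads are hypothetical stand-ins. Each stage is timed under its tag, and the stage with the highest average duration is flagged as the likely bottleneck.

```python
from __future__ import annotations

import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Iterator


class PipelineTracer:
    """Hypothetical tracer: records wall-clock time for each tagged pipeline stage."""

    def __init__(self) -> None:
        self.durations: dict[str, list[float]] = defaultdict(list)

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        """Time the enclosed block and file the duration under the stage tag `name`."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.durations[name].append(time.perf_counter() - start)

    def average(self, name: str) -> float:
        """Mean duration in seconds for one stage that has been traced at least once."""
        runs = self.durations[name]
        return sum(runs) / len(runs)

    def bottleneck(self) -> str | None:
        """Return the stage with the highest average duration, or None if nothing was traced."""
        if not self.durations:
            return None
        return max(self.durations, key=self.average)


# Simulated query-to-dashboard pipeline; sleep() stands in for real work.
tracer = PipelineTracer()
for _ in range(3):
    with tracer.stage("query"):
        time.sleep(0.001)
    with tracer.stage("transform"):
        time.sleep(0.02)
    with tracer.stage("dashboard"):
        time.sleep(0.001)

for name in tracer.durations:
    print(f"{name}: {tracer.average(name) * 1000:.1f} ms")
print("Likely bottleneck:", tracer.bottleneck())
```

Averaging per stage, rather than judging from a single run, keeps one slow outlier from being mistaken for a systemic bottleneck. A production tool would presumably also track these figures over time to catch gradual degradation, such as a server running out of space.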

All of this is an efficiency play that might not seem to make sense at a smaller scale. But with the waterfall of new devices that come online every day, as well as more and more ways of understanding how people use tools online, even the smallest companies can quickly start building massive data sets. And if that company’s business depends on some machine learning happening in the background, it depends on all that training and tracking happening as quickly and smoothly as possible, with any hiccups having real consequences for its own business.

Intermix.io isn’t the first company to try to create some application performance management software. There are others like Datadog and New Relic, though Lappas says that the primary competition from them comes in the form of traditional APM software with some additional scripts tacked on. However, data flows are a different layer altogether, which means they require a more specialized, custom approach to addressing that problem.

The NBA and NHL finals have just finished up. We just passed the Summer Solstice (for the Northern hemisphere). The 2018 World Cup is underway and will reach the knockout stage by the end of June. And all the while, …

During my recent visit to my hometown of Delhi, I was in charge of the arrangements for my parents’ twenty-fifth wedding anniversary. As part of the planning, I used Receiver for Linux to run multiple sessions on multiple desktops, to …

Well, guess we should have known better. A.I. can play Jeopardy, Chess and Go but it seems their capabilities have topped out. Let’s talk about a new A.I. “winter”. A.I. was going to take your job – guess again. A.I.

The post That’s It? After All the Hype and Fear About A.I.? Turns Out They Can’t Do the Hard Stuff appeared first on CloudTweaks.

A Japanese hotel chain company notified approximately 125,000 guests about a data breach that affected one of its software providers. On 26 June, Prince Hotels & Resorts published a statement on its website explaining that its reservations system in English, Simplified Chinese, Traditional Chinese and Korean was affected by an instance of unauthorized access. The […]… Read More

The post Japanese Hotel Chain Notifies 125K Guests of Software Provider Breach appeared first on The State of Security.

Shared Autonomous Vehicles Will Reduce the Number of Cars and Overall Travel Times in Cities, But Potentially Worsen Traffic and Increase Travel Times in Downtown Areas, a World Economic Forum/BCG Study Shows. NEW YORK, June 27, 2018 (GLOBE NEWSWIRE) —

The post Autonomous Vehicles Will Further Clog City Centers Unless Lawmakers Step Up, Boston Study Finds appeared first on CloudTweaks.

The rapid pace of technology innovation and applications in recent decades — you could argue that just about every kind of business is a “tech” business these days — has spawned a sea of tech startups and larger businesses that are focused on serving that market, and equally demanding consumers, on a daily basis. Today, a venture capital firm in the UK is announcing a fund aimed at helping to grow the technologies that will underpin a lot of those daily applications.

Cambridge-based IQ Capital is raising £125 million ($165 million) that it will use specifically to back UK startups that are building “deep tech” — the layer of research and development, and potentially commercialised technology, that is considered foundational to how a lot of technology will work in the years and decades to come. So far, some £92 million has been secured, and partner Kerry Baldwin said that the rest is coming “without question” — pointing to strong demand.

There was a time when it was more challenging to raise money for very early stage companies working at the cusp of new technologies, even more so in smaller tech ecosystems like the UK’s. As Ed Stacey, another partner in the firm, acknowledges, there is often a very high risk of failure at even more stages of the process, with the tech in some cases not even fully developed, let alone rolled out to see what kind of commercial interest there might be in the product.

However, there has been a clear shift in the last several years.

There is a lot more money floating around in tech these days, so much so that it’s created a stronger demand for projects to invest in. (Another consequence of that is that when you do get a promising startup, funds are potentially giving them hundreds of millions and causing other disruptions in how they grow and exit, which is another story…)

And while there are definitely a lot of startups out there in the world today, a lot of them are what you might describe as “me too”, or at least making something that is easily replicated by another startup, making the returns and the wins harder to find among them.

The new focus we are seeing on “deep tech” is a consequence of both of those trends.

“The low-hanging fruit has been discovered… Shallow tech is a solved problem,” Stacey said, in reference to areas like the basics of e-commerce services and mobile apps. “These are easy to build with open source components, for example. It’s shallow when it can be copied very quickly.”

In contrast, deep tech is “by definition is something that can’t easily be copied,” he continued. “The underlying algorithm is deep, with computational complexity.”

But the challenges run deep in deep tech: not only might a product or technology never come together, or find a customer, but it might face problems scaling if it does take off. IQ Capital’s focus on deep tech is coupled with the company trying to determine which ideas will scale, not just work or find a customer. As we see more deep tech companies emerging and growing, I’m guessing scalability will become an ever more prominent factor in deciding whether a startup gets backing.

IQ Capital’s investments to date span areas like security (Privitar), marketing tech (Grapeshot, which was acquired by Oracle earlier this year), AI (such as speech recognition API developer Speechmatics) and biotechnology (Fluidic Analytics, which measures protein concentrations), all areas that will be the focus of this fund, along with IoT and other emerging technologies and gaps in the current market.

IQ Capital is not the only fund starting to focus on deep tech, nor is its portfolio the only range of startups focusing on this (Allegro.AI and deep-learning chipmaker Hailo are others, to name just two).

LPs in this latest fund include family offices, wealth managers, tech entrepreneurs and CEOs from IQ’s previous investments, as well as British Business Investments, the commercial arm of the British Business Bank, the firm said.

MANILA, June 28th, 2018 — According to the latest IDC Asia / Pacific, excluding Japan (APeJ) Semiannual Services Tracker, total outsourcing services revenues in the Philippines exceeded US$300 million for the full-year 2017, with 8.2% year-over-year growth (in constant currency). IDC’s key

The post IDC: Growing Preference for Cloud Services to Affect the IT Outsourcing Market in the Philippines appeared first on CloudTweaks.

The U.S. Census recently released the 2016 nonemployer business statistics. Key quote from their press release:

... nonemployer establishments increased 2.0 percent from 24,331,403 in 2015 to 24,813,048 in 2016. Receipts increased 1.5 percent from $1.15 trillion in 2015 to $1.17 trillion in 2016.

This continues a pattern of about 2% growth per year in the number of nonemployer businesses over the past couple of decades. Since 2003, for example, the number of nonemployer businesses has increased by about 5.7 million.

Nonemployer businesses are businesses that have a business owner, but no traditional full or part-time (W2) employees. The average nonemployer business is small and likely a part-time business - the median nonemployer falls into the $10,000 to $24,999 gross receipts bracket.

But one of the fastest growing segments of nonemployers are those reporting $100k or more in gross receipts. As the chart below shows, this segment grew from 2.2 million in 2010 to about 2.8 million in 2016, or about 27%. This is almost twice the overall growth rate for nonemployer businesses during this period.
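The growth figures above can be checked with simple percentage-change arithmetic. The 2010 and 2016 segment counts are the rounded values given in the text, so the second result is approximate; the `pct_change` helper is ours for illustration.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


# Counts quoted above: all nonemployer establishments, and the $100k+ receipts segment.
all_nonemployers = pct_change(24_331_403, 24_813_048)  # 2015 -> 2016
high_earners = pct_change(2.2e6, 2.8e6)                # 2010 -> 2016, rounded inputs

print(f"All nonemployers, 2015 to 2016: {all_nonemployers:.1f}%")
print(f"$100k+ segment, 2010 to 2016: {high_earners:.1f}%")
```

The first result rounds to the 2.0% in the Census release, and the second to the roughly 27% cited for the high-earning segment.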

This data echoes the findings from the MBO Partners State of Independence study series, which also shows strong growth in the number of independent workers reporting earnings of $100k or more.

The strong economy is creating more opportunities for solopreneurs of all kinds - and especially highly skilled solopreneurs - to win more business and also increase their prices. We expect to see more growth in nonemployers in general and the high earning segment in particular.

Emergent Research (that's us) contributes to the MBO Partners State of Independence study series.